Machine learning and natural language processing already play significant roles in eDiscovery. Technology assisted review, contract analysis, audio transcription, language translation, sentiment analysis, and named entity recognition are all uses that have been deployed for years and would not be possible without machine learning. Large language models (LLMs) have already been behind many of CDS’ software offerings. For example:

- Relativity has used LLMs through their TextIQ Privilege Log offering for several years, and recently have added LLM to their sentiment analysis and machine translation offerings in RelativityONE.

- NexLP, now Reveal Data, has used an LLM – MBERT from Google – to generate its pre-trained TAR models since 2019.

- Relativity recently announced a pilot project (in which CDS is participating) to evaluate GPT-4 for identification of relevant documents

Over the next few months and years, the industry will see additional implementations of LLMs in eDiscovery platforms, and time and testing will tell what has the most impact. Here are some FAQs and thoughts from CDS:

Will eDiscovery platforms have chatbots?

Yes, the natural language search capabilities of LLMs will take the form of chatbots in eDiscovery databases. Two chat software have already been released and marketed: Fileread.AI, a legal technology start-up released its first product, a plug-in for RelativityONE that works with GPT-4. CS Disco announced its chatbot Cecelia at LegalTech New York in 2023, and it has been released in select databases. While we know chatbots are coming for eDiscovery databases, there are still some questions surrounding the potential for chatbots in eDiscovery databases.

- Data Security Standards. A large, modern LLM like GPT-4 would not likely sit within the eDiscovery datacenter. Instead, it would most likely be in a cloud services provider like Azure or AWS, and those data security standards will need to be in-line with clients’ data security and retention standards.

- Validation. There is a chance that LLMs might respond with hallucinations or “bad” information. While the responses may be limited to the dataset being analyzed, it’s possible that the chatbot will still incorrectly summarize a document or multiple documents. The need for validation is two-sided – even if the chatbot is providing perfect responses, it can only reply to the query entered by the attorney. Therefore, an incorrect date, misspelled name, or sentence that might be interpreted multiple ways will impact the results.

- Cost. Current pricing for the largest GPT-4 model is $.06 for every 1,000 tokens (about 750 words) input, and $.12 for every thousand tokens output. If entire eDiscovery databases – which range from a few thousand to tens of millions of documents – will be “input” and average documents have at least 750 words, the cost will be significant. However, as the technology improves and competition grows, costs will decline, but at this stage, it might not be a feature that is appropriate in a multi-million document ECA database with only a small fraction of potentially relevant data.

How will this impact search?

Searching in eDiscovery databases is a difficult skill to master, particularly with different platforms all having their own idiosyncratic interfaces. As a result, assisting attorneys with this challenge is one of the most common requests in the litigation support service industry. LLMs, with their ability to understand natural language, stand to have a positive impact, and this feature could be incorporated into eDiscovery software over the next few years. However, it isn’t clear whether natural language search will find its way into the negotiated eDiscovery protocol intended to narrow a large collection to a pool of potentially relevant documents for review. In this type of search, it may always be easier to agree to objective search criteria (e.g., all documents containing both “dog” AND “cat”).

How will LLMs impact Technology Assisted Review (TAR)?

TAR has existed in virtually the same two formats since it emerged as a defensible document review process for large data sets in 2012 after Judge Andrew Peck’s seminal opinion in Da Silva Moore. There are two workflows commonly known as “TAR 1.0” and “TAR 2.0.”

- In a TAR 1.0 workflow, attorneys review a random sample of documents typically known as a “Control Set.” Attorneys then review a few samples of documents, typically about 1,000 to 5,000 in total, used to train the TAR software to distinguish relevant from irrelevant documents. The software generates scores for the unreviewed documents, and using the Control Set for measurement, chooses a cut-off score. Documents above the cut-off score are produced or reviewed, while documents below the cut-off score are discarded.

- In TAR 2.0, the software works the same way, but the review workflow is different. Rather than building a model and then proceeding with review or production, the TAR scores are used to sort the documents. Documents with the highest scores are reviewed first, and the software re-trains itself periodically with newly-reviewed documents. At some point, relevant documents start to run out, and (hopefully) the review can end before the full set has been reviewed manually. A decision to stop is typically made by reviewing an Elusion Sample – a random sample of the remaining documents.

Both workflows use the same traditional supervised machine learning approach to create the predictive models. The software analyzes the text in the relevant and irrelevant examples to identify correlations between words or phrases that more frequently appear in relevant documents, and then generates scores. In 99% of TAR reviews over the last decade, there hasn’t been a “model” until one is built from the example documents reviewed in that matter. In other words, every new review starts from scratch.

In recent years, many software providers have been offering “portable” models to help eliminate the need to start entirely from scratch. Portable models come in several varieties:

- Transferring a predictive model in one case to a new set of documents in a new workspace

- Importing a file with your own words and scores

- Pre-built models provided by the software for common litigation types (e.g. a model that finds contracts, another that finds HR documents and resumes, etc.)

These models can largely be useful for corporate clients that have the same documents and language at stake in repeat litigation. However, they haven’t gained widespread adoption, likely because they don’t singularly achieve the type of recall and precision needed in legal document review. As a result, portable models have mostly been used as a jump start for review while hundreds or thousands of reviewed document examples are still needed to provide value to attorneys.

LLMs might provide a different opportunity since they have already been trained using massive volumes of information scraped from the internet, which gives them the ability to understand natural language. LLMs can also perform data classification, e.g., labeling the topics of news articles and sentiment analysis are frequent classification tasks performed by LLMs. Although legal document review may seem like a different type of classification, the only real difference is the subject matter expertise needed to make the distinction between a relevant and non-relevant document. Therefore, if the LLM can be trained on what a relevant document is, they could be useful for TAR workflows.

The Big Question: How will LLMs be incorporated into TAR workflows?

It remains to be seen how LLMs will be incorporated in TAR workflows. In the meantime, it is possible to replicate the TAR workflow using an existing LLM.

One potential option would be to leave the process alone and use the LLMs in a process called “Fine Tuning.” In this workflow, new documents (the potentially relevant documents for the legal document review) are ingested to the model. A subset of these documents could be reviewed by attorneys and labeled as relevant and not relevant, and a traditional classification algorithm added to create the same type of ranks that have been used in traditional TAR 1.0 and TAR 2.0 workflows. This approach has the advantage of utilizing a workflow that attorneys know will be defensible and accepted by regulators such as the DOJ and FTC in merger reviews. Unfortunately, this approach has some limitations, and two academic studies performed using pre-trained transformers for TAR workflows failed to find substantial improvement over existing classifiers. However, the technology is rapidly improving and LAER AI, a newcomer in the TAR market, performed its own study and found that their models significantly outperformed traditional tools using a traditional TAR workflow.

A second possible workflow would include training the LLM the same way attorneys hired to perform document review are trained. This would involve telling the LLM what is relevant for the review, potentially using a review protocol or natural language search. The LLM could then analyze each document and return with a score or a decision regarding whether or not it is relevant. In this workflow, it would still be possible to provide the software with examples of relevant documents, but it likely wouldn’t be necessary or beneficial to provide more than a few for each issue. Validation could be the same as traditional TAR – the subject matter expert attorneys would review a random sample used to evaluate recall and precision. This workflow has the advantage of requiring much less time reviewing documents to train the software by subject matter experts although it might be even more computationally expensive than fine tuning a model. In addition, it lacks a decade of approvals in court opinions.

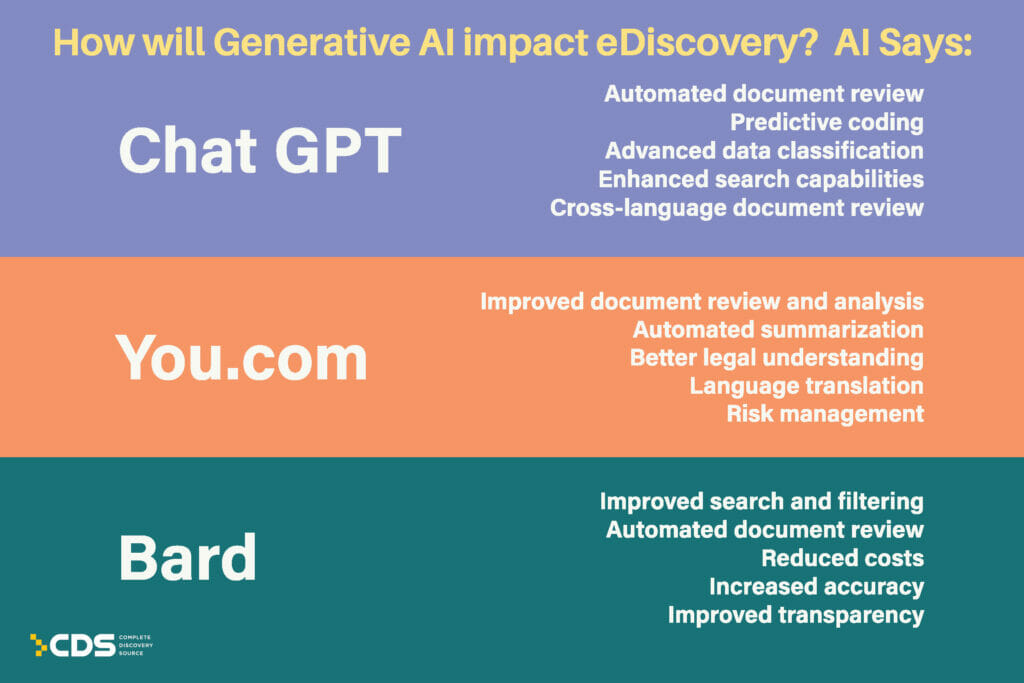

How do the LLMs see their future in eDiscovery?

For fun, we asked three of the top Generative AI chatbots to list the top five ways generative AI and LLMs are most likely to impact eDiscovery. They said:

Want to find out more about how machine learning will impact your eDiscovery processes? CDS provides a full range of advisory services related to AI and eDiscovery workflows. To discuss how we can help your organization understand the benefits and challenges related to machine learning, contact us for a consultation today.